
Hackpact

Rules

- develop and execute small scripts related to your research topic

- no more than 45 minutes for each experiment

- post the premise, outcome and process of the experiment

- embrace loss, confusion, dilettance, and serendipity

Research topic

- persuasive interfaces

- user interfaces

- social media interfaces

Experiments

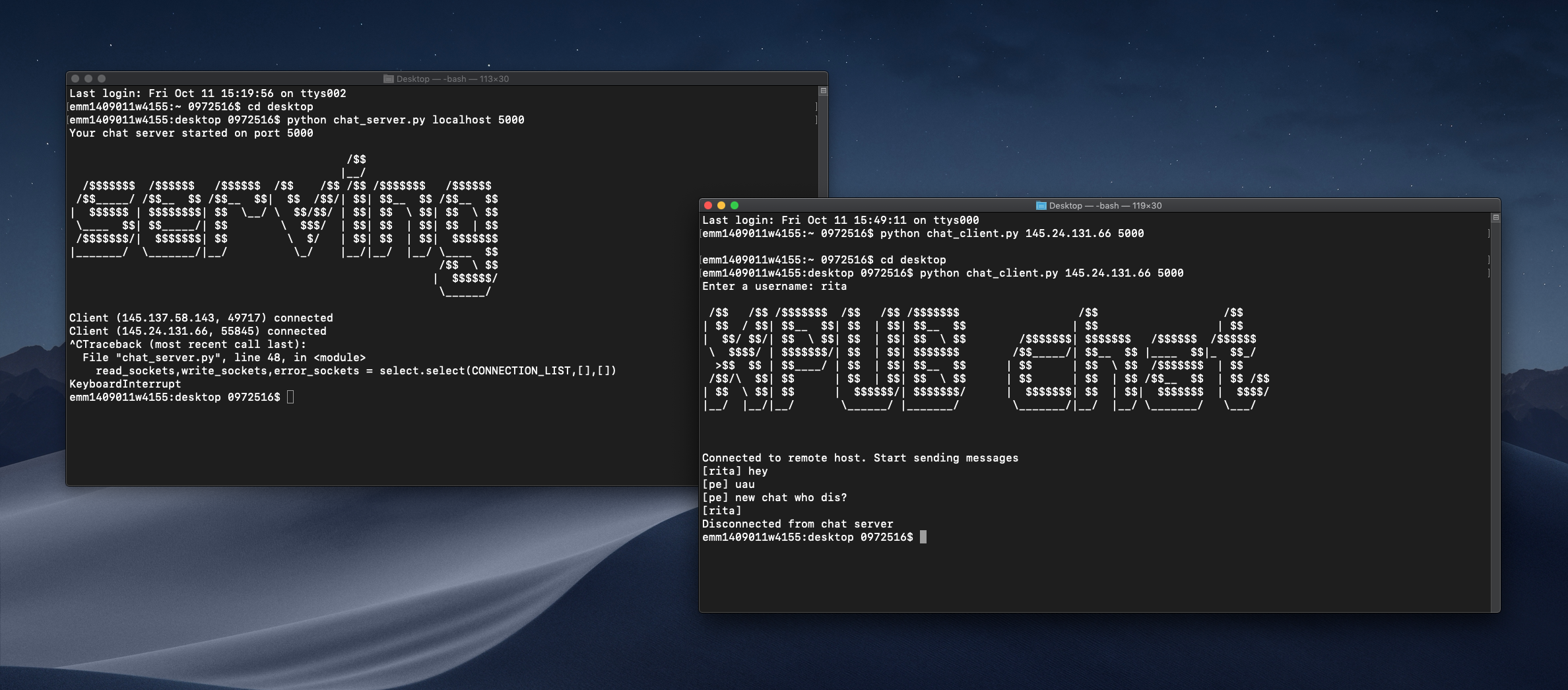

Chat server and client

Field notes: An important feature of any social media platform is the chat interface, so I wanted to explore it in more depth. I created a server script and a client script. This was very interesting for me, and I would like to work on it further.
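The server and client scripts themselves are not included here. As an illustration only, a minimal chat relay could look like the Python sketch below. It assumes asyncio streams and a line-based protocol, and the names `handle_client`, `start_chat_server` and `demo` are hypothetical: every line one client sends is forwarded to all the other connected clients.

```python
import asyncio

# Writers for every currently connected client (hypothetical minimal relay).
clients: set[asyncio.StreamWriter] = set()


async def handle_client(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Forward each line this client sends to every other connected client."""
    clients.add(writer)
    try:
        # readline() returns b"" once the client disconnects, which ends the loop.
        while line := await reader.readline():
            # Iterate over a copy: other handlers may change the set while we await drain().
            for other in list(clients):
                if other is not writer:
                    other.write(line)
                    await other.drain()
    finally:
        clients.discard(writer)
        writer.close()


async def start_chat_server(host: str = "127.0.0.1", port: int = 8888):
    """Start listening; port 0 lets the operating system pick a free port."""
    return await asyncio.start_server(handle_client, host, port)


async def demo() -> str:
    """Connect two clients, send a line from A, and return what B receives."""
    server = await start_chat_server(port=0)
    port = server.sockets[0].getsockname()[1]
    reader_a, writer_a = await asyncio.open_connection("127.0.0.1", port)
    reader_b, writer_b = await asyncio.open_connection("127.0.0.1", port)
    await asyncio.sleep(0.1)  # give the server time to register both connections
    writer_a.write(b"hello from A\n")
    await writer_a.drain()
    received = await asyncio.wait_for(reader_b.readline(), timeout=2)
    for w in (writer_a, writer_b):
        w.close()
        await w.wait_closed()
    server.close()
    await server.wait_closed()
    return received.decode().strip()
```

Relaying through one central server keeps each client trivial: any line-based TCP client, such as `nc`, could act as a client once the returned server's `serve_forever()` coroutine is awaited.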

Clickbait bot

One of the persuasion tactics users employ is clickbait.

“Clickbait is a form of false advertisement which uses hyperlink text or a thumbnail link that is designed to attract attention and entice users to follow that link and read, view, or listen to the linked piece of online content, with a defining characteristic of being deceptive, typically sensationalized or misleading [...]”

I used the scripts from the Eliza bot experiments we made last year with Michael to make a clickbait generator. Field notes: The Eliza folder I have is missing a Python script that the Makefile needs. The bot doesn't read numbers or characters such as ??!!!!!!, which are quite essential for clickbait titles. It's probably something to do with the UTF-8 encoding, but I couldn't figure it out.
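The original Eliza scripts are not shown, so the cause can only be guessed. Since `?`, `!` and digits are plain ASCII and are encoded identically in UTF-8, one plausible culprit is a tokenizer that keeps only letters. The sketch below (the patterns and function names are hypothetical, not taken from the Eliza code) contrasts such a tokenizer with one that keeps numbers and runs of `?!` as tokens of their own.

```python
import re

# Assumed behaviour of a letters-only tokenizer: digits and punctuation vanish.
LETTERS_ONLY = re.compile(r"[A-Za-z]+")

# Hypothetical broader pattern: word-like runs (letters, digits, apostrophes),
# plus any run of ? and ! kept as a separate token.
WORDS_AND_PUNCTUATION = re.compile(r"[\w']+|[?!]+")


def tokenize(text: str, pattern: re.Pattern) -> list[str]:
    """Split text into the tokens the given pattern recognises."""
    return pattern.findall(text)


def detokenize(tokens: list[str]) -> str:
    """Rejoin tokens with spaces, attaching ?/! runs directly to the previous word."""
    out = ""
    for tok in tokens:
        if out and not re.fullmatch(r"[?!]+", tok):
            out += " "
        out += tok
    return out
```

With the letters-only pattern, a title like "10 tricks you won't believe??!!!!!!" loses its number and its punctuation; with the broader pattern it survives a round trip through `tokenize` and `detokenize` unchanged.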

Scraping and Filtering News

While researching persuasive interfaces, I came across Facebook's news feed experiment, revealed in 2014: “It has published details of a vast experiment in which it manipulated information posted on 689,000 users' home pages and found it could make people feel more positive or negative through a process of ‘emotional contagion’.” (https://www.theguardian.com/technology/2014/jun/29/facebook-users-emotions-news-feeds) The fact that what we see online influences us so much made me want to explore it further. For this experiment I wanted to scrape news headlines and build my own filter bubble.

I made a script that scrapes news headlines from The Washington Post website and writes them to a text file. Another script then checks which of these headlines contain the word “Trump” (my filter word) and writes the matching ones to an HTML file, so I can make myself read only Trump news forever. Field notes: I could improve the last script by splitting words from punctuation (so that “Trump's” counts as “Trump”), but I need to manage my time better: I usually go over the 45-minute mark. I should remember that I'm supposed to “embrace loss, confusion, dilettance, and serendipity”.
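The two scripts are not reproduced here. A sketch of the same pipeline in one Python file, using only the standard library, might look like the block below. It assumes headlines sit in `<h2>`/`<h3>` tags (the real Washington Post markup may differ and changes over time), and `HeadlineParser`, `fetch`, `mentions` and `filter_bubble_html` are illustrative names. `mentions` applies the punctuation splitting from the field notes, so “Trump's” counts as “Trump”.

```python
import html
import re
import urllib.request
from html.parser import HTMLParser


class HeadlineParser(HTMLParser):
    """Collect the text of <h2>/<h3> elements (assumed to hold the headlines)."""

    HEADLINE_TAGS = {"h2", "h3"}

    def __init__(self) -> None:
        super().__init__()
        self.headlines = []
        self._parts = None  # list of text fragments while inside a headline tag

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADLINE_TAGS:
            self._parts = []

    def handle_endtag(self, tag):
        if tag in self.HEADLINE_TAGS and self._parts is not None:
            text = " ".join("".join(self._parts).split())  # collapse whitespace
            if text:
                self.headlines.append(text)
            self._parts = None

    def handle_data(self, data):
        if self._parts is not None:
            self._parts.append(data)


def fetch(url: str) -> str:
    """Download a page; some sites reject requests without a browser-like User-Agent."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def mentions(headline: str, word: str) -> bool:
    """Whole-word, case-insensitive match. Splitting on non-word characters
    turns "Trump's" into "trump" and "s", so it counts as "Trump"."""
    return word.lower() in re.findall(r"\w+", headline.lower())


def filter_bubble_html(headlines: list[str], word: str = "Trump") -> str:
    """Render only the headlines that mention `word` as an HTML list."""
    items = "\n".join(
        f"  <li>{html.escape(h)}</li>" for h in headlines if mentions(h, word)
    )
    return f"<!DOCTYPE html>\n<html><body>\n<ul>\n{items}\n</ul>\n</body></html>\n"
```

In use, one would feed `fetch("https://www.washingtonpost.com/")` into a `HeadlineParser` and write `filter_bubble_html(parser.headlines)` to an `.html` file.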

Touch 2tap

One of my topics of interest is how technology extends its influence beyond interfaces, changing our gestures and language. I think it would be interesting to explore the expressions of technology that have become omnipresent in our bodies.

To begin with, I will choose one hand gesture and make a small visual experience. If I continue with this series, it would be nice to put them all together: you would toggle the visuals with gestures you make every day when using technology, with no instructions beyond embodied knowledge. Field notes: I wanted to use touch rather than be restricted to the keyboard, as touch reflects the proximity we have with our devices. At first I used Kivy, a Python library, because it allowed me to work with multi-touch. Michael made me realise it was too complex for the small prototype I was aiming for, so I moved to JavaScript.

I spent well over 45 minutes because I had to search for JavaScript libraries that recognise touch gestures. I chose the Hammer.js library for the prototype.

Gesture: Double-tap

Outcome: Simple gradient change when the user double-taps the screen.
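Hammer.js recognises the double tap internally. To show roughly what such a recognizer checks, here is the logic written out in Python: two taps count as a double tap when they are close in both time and position, and each double tap switches the gradient. The thresholds, the `Tap` type and the gradient strings are assumptions for illustration, not Hammer.js's actual defaults or the prototype's colours.

```python
import math
from dataclasses import dataclass

# Assumed thresholds for this sketch (not Hammer.js's real defaults).
MAX_INTERVAL_S = 0.3    # the second tap must follow the first within this many seconds
MAX_DISTANCE_PX = 10.0  # and land within this many pixels of it

# Hypothetical CSS gradients to cycle through.
GRADIENTS = [
    "linear-gradient(to bottom, #ff7e5f, #feb47b)",
    "linear-gradient(to bottom, #6a11cb, #2575fc)",
]


@dataclass
class Tap:
    t: float  # timestamp in seconds
    x: float  # screen position in pixels
    y: float


def double_tap_indices(taps: list[Tap]) -> list[int]:
    """Return the index of each tap that completes a double tap."""
    hits = []
    first = None  # candidate first tap of a pair
    for i, tap in enumerate(taps):
        if first is not None:
            quick = tap.t - first.t <= MAX_INTERVAL_S
            near = math.hypot(tap.x - first.x, tap.y - first.y) <= MAX_DISTANCE_PX
            if quick and near:
                hits.append(i)
                first = None  # a third quick tap has to start a new pair
                continue
        first = tap
    return hits


def current_gradient(taps: list[Tap]) -> str:
    """Each double tap moves to the next gradient, cycling through the list."""
    return GRADIENTS[len(double_tap_indices(taps)) % len(GRADIENTS)]
```

Checking distance as well as timing keeps two quick taps on opposite sides of the screen from being read as one gesture.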

Touch panup

Field notes: This time it was faster because I knew the library better. It's hard to prototype touch gestures because my computer doesn't have a touchscreen, so I always have to put the script online to try it on my phone.

I often forget the command to do it (Python 3; on Python 2 the module was called SimpleHTTPServer):

python3 -m http.server 8000
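Serving the folder this way listens on all network interfaces by default, so a phone on the same Wi-Fi network can open the page through the computer's local IP address. The hypothetical helper below does the same from a Python script and also prints the address to type into the phone. The UDP `connect` trick for guessing the local address is an assumption that works on typical home networks, not a guaranteed method.

```python
import socket
from functools import partial
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


def lan_address() -> str:
    """Best guess at this computer's address on the local network.

    Connecting a UDP socket sends no packets; it only makes the OS choose
    the outgoing interface. Falls back to 127.0.0.1 when there is no route.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        try:
            s.connect(("192.0.2.1", 80))  # documentation-only address, never contacted
            return s.getsockname()[0]
        except OSError:
            return "127.0.0.1"


def make_server(port: int = 8000, directory: str = ".") -> ThreadingHTTPServer:
    """Serve `directory` on all interfaces, like `python3 -m http.server`."""
    handler = partial(SimpleHTTPRequestHandler, directory=directory)
    return ThreadingHTTPServer(("", port), handler)


def serve(port: int = 8000, directory: str = ".") -> None:
    """Print the URL for the phone, then serve until interrupted."""
    server = make_server(port, directory)
    print(f"Open http://{lan_address()}:{server.server_address[1]}/ on your phone")
    with server:
        server.serve_forever()
```

Calling `serve()` blocks until it is stopped with Ctrl+C.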

Gesture: Pan up

Outcome: Simple gradient change when the user pans up the screen.
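As with the double tap, Hammer.js does the recognition in the prototype. A sketch of the underlying check in Python, under assumed values: a pan up is a finger path that moves upward by at least a minimum distance and more vertically than horizontally. `MIN_PAN_PX` and `is_pan_up` are hypothetical names.

```python
# Assumed minimum travel, in pixels, before a movement counts as a pan.
MIN_PAN_PX = 10.0


def is_pan_up(points: list[tuple[float, float]]) -> bool:
    """Decide whether one finger's path is an upward pan.

    `points` are (x, y) screen positions in the order they were reported.
    Screen y grows downward, so moving up means y decreases.
    """
    if len(points) < 2:
        return False
    dx = points[-1][0] - points[0][0]
    dy = points[-1][1] - points[0][1]
    # Upward by at least the threshold, and more vertical than horizontal.
    return -dy >= MIN_PAN_PX and abs(dy) > abs(dx)
```

Comparing only the first and last positions ignores wobble along the way, which is usually enough for a single-gesture prototype.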

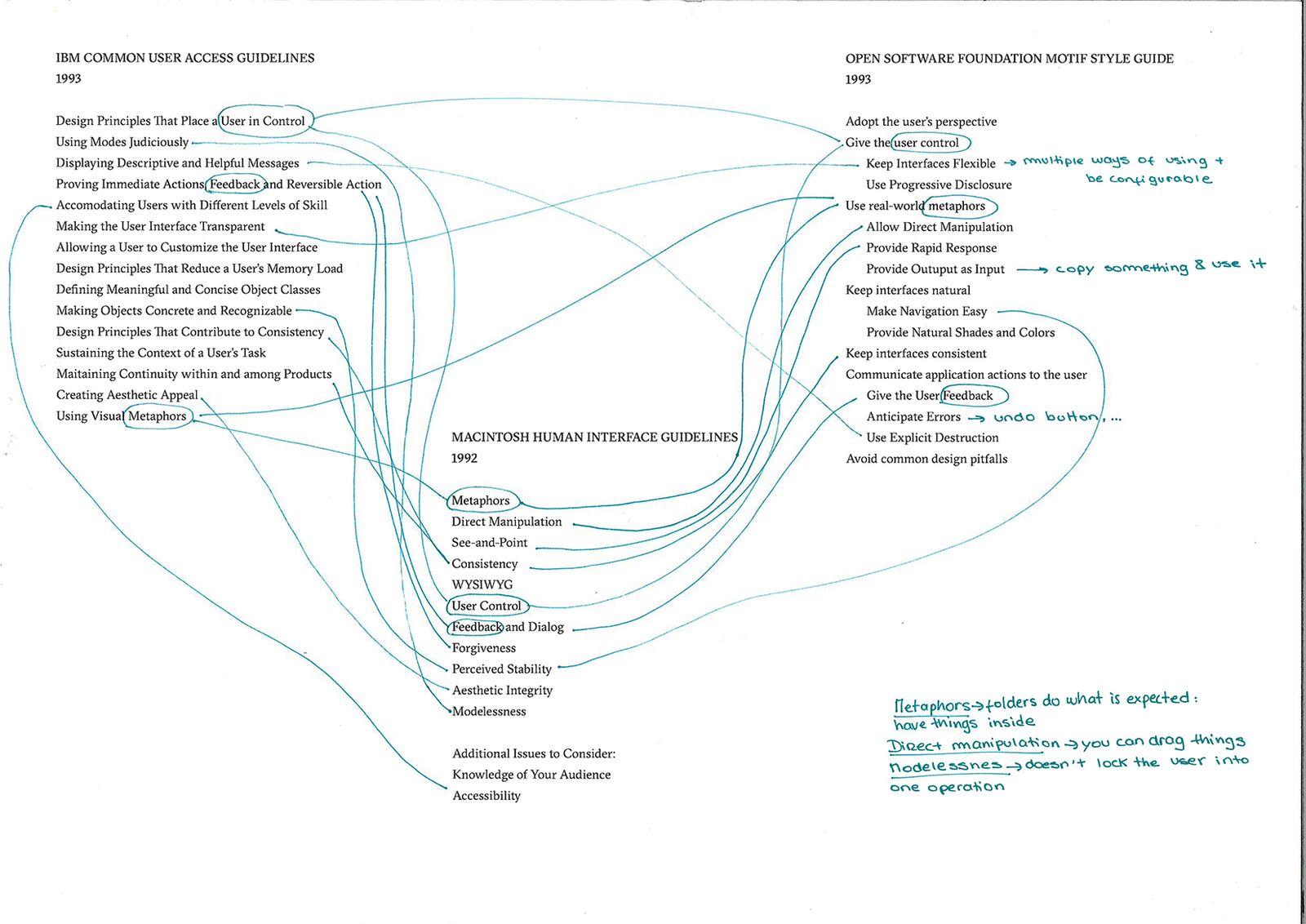

Comparing UI guidelines

After seeing the diagram above comparing Apple's guidelines over time, I thought it would be interesting to explore the guidelines of more companies and draw comparisons between them.